Zoom, Miro and 500 people walk into a scroll bar, or how to organise an online interactive session for a large group of people.

Last week, Specflow and I organised an interactive webinar. For posterity, and anyone crazy enough to do something similar in the future, here’s a quick summary. Read on to learn how we facilitated it, what worked well, what worked badly, and some ideas on how to do it better.

TLDR summary: we got 500 people to try collaborating over Zoom and Miro. Miro more or less held its ground, but I feel as if we broke Zoom. With a few tweaks I’d use Miro again next time, but perhaps look for a different video-conferencing system.

Workshop set-up

The Specflow team wanted to organise a community webinar, and we thought about taking the Given-When-Then With Style community challenges live. In most webinars the audience is passive, and if there is any interaction it’s usually just quick polling. Because the community challenges relied heavily on participation, I proposed to make the webinar truly a participatory experience. Ideally, we wanted to get a huge number of people actually collaborating, working in small groups most of the time. The idea was that people would discuss things in small groups, add stuff to an area of the Miro board, and I would observe and comment on their conclusions.

We ran the teleconference as a Zoom meeting, not as a webinar (more on this later). A nice trick here is to disable participant audio - this prevents people from talking over the speaker in the main room, but does not apply to breakout rooms. They can still collaborate easily once the exercises start.

To cover the risk of too many people registering, the Specflow team proposed to also stream the session to YouTube (this turned out to be an excellent idea, more on that below).

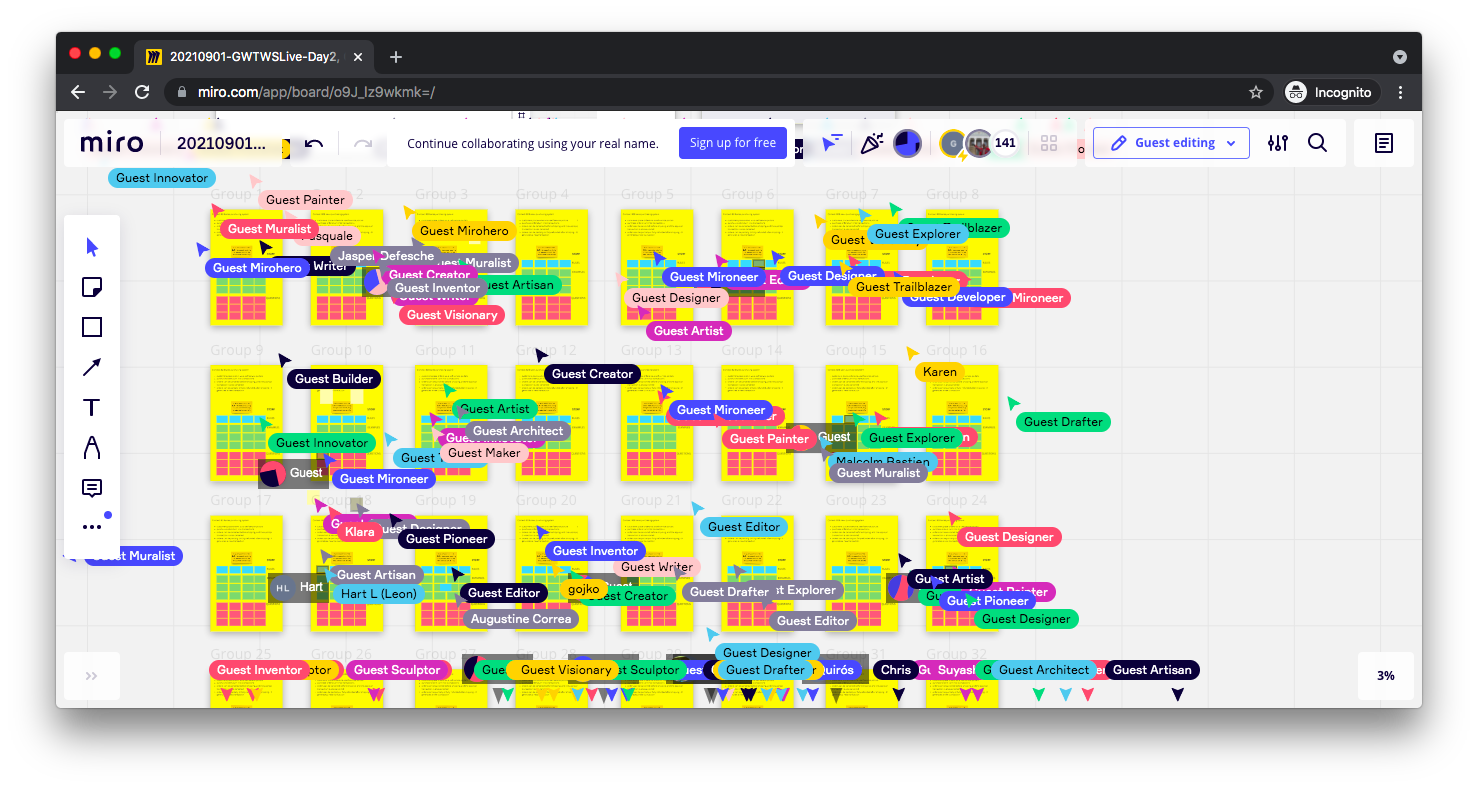

Roughly 1500 people registered. In the end, about 500 people showed up, and got split into 40 rooms (trying to create 50 failed, so in a panic I just tried a random lower number, and 40 worked). We lost about 100 once the breakout sessions started (more on this also later). 250 came back the second day. The YouTube stream also got some traction, and roughly 40 people switched from active participation to just observing.

I had separate laptops for Miro and Zoom, as a precaution against my processor melting. Maybe this was overkill, but the laptop with Zoom was also running OBS to replace a green screen with slides, and I didn’t want to take chances. I’m not sure if the meeting size affected teleconference performance or not, but the fans on the machine running Zoom and OBS were blowing like mad all the time, so having two separate computers was probably a good idea.

Scaling an online workshop to an interactive webinar

My online workshops usually run for two and a half hours per day. Each day is split into three modules, with short breaks between them. This helps to minimise disruption and teleconference fatigue. Each module starts with a short presentation, followed by breakout room group-work, and a review discussion. The schedule works well with groups up to 30-40 people, so I rather optimistically thought about just scaling it up.

The last part of each module obviously had to change, since opening a discussion for 500 people didn’t seem like a good idea. Instead, I decided to roam around the Miro board pointing out interesting conclusions, and use them as talking points for the stuff I wanted to explain.

I expected the first two parts of each module (presentation and groupwork) to just scale easily. The group size doesn’t really matter for a presentation, and we’d still be dividing people into smaller groups for the breakout sessions. Unfortunately, this is where the problems started. Zoom was randomly kicking people out, and they had to re-connect and wait to be assigned to a room. I noticed this problem on a much smaller scale when running online workshops with a few dozen people, but at large scale this became a huge issue. I had to spend most of the time during the first exercise just readmitting people and reassigning them to meeting rooms, with new people popping up every few seconds. Jordan Western from Specflow is the unsung hero of the story, because he took care of all the Zoom room management from the second breakout onwards, which turned out to be a full-time task. If I were alone, things would not have gone nearly as smoothly. So if you plan to do something as crazy as running a workshop for a few hundred people online, make sure you do not facilitate it alone.

At the end of the first day, people just stayed online when the event ended. I assumed they expected a Q&A session, so we did an impromptu one, and opened participant audio for questions. This was a mistake. Lots of off-topic stuff came up. People were asking questions with a very specific context, which was not feasible or valuable to discuss with a large group. On the second day, we did a facilitated Q&A instead. Jordan was choosing questions from the Zoom chat and Twitter, and the two of us had a conversation about those topics. This allowed much better time management, so I would definitely use facilitated Q&A next time.

Another aspect that needs to change when scaling a 30-person workshop to a 500-person workshop is to reduce context switching. We forced people to use too many different tools - the Specflow Online Gherkin Editor, Miro and Zoom. Each additional tool adds windows, more context and potential friction. People had to work with 3-4 tabs open, and I can only assume those on smaller screens didn’t have a great experience with this. With a smaller session, the facilitator can help people individually if they get stuck or have tooling issues. With a large group, that’s not feasible. Minimising the number of tools would help to reduce moving parts, things that can go wrong. For this specific session we wanted to get people to edit and share Gherkin files and to try example mapping. There’s no single tool that does both well, so I don’t see how we could have reduced the tooling. Next time, maybe being less ambitious and more focused with the program can help. Zoom now has apps, so in theory everything can stay in there and people would just need a single window. But then again, I wouldn’t recommend using Zoom for large groups (more on this below).

How to run large sessions in Miro

Here are five key ideas for running a session with several hundred people on Miro:

- turn off participant cursors

- turn off highlighting on changes

- avoid using sidebars and comments

- make sure people do not move around a lot

- lock everything on the board that people are not supposed to move

The first three items directly affect performance and had to be communicated to participants upfront. Just to avoid confusion, Miro documentation suggests resolving comments quickly, not avoiding them altogether. With such a large group, however, dealing with comments promptly would be impossible for me. That’s why we just asked participants to not use this feature.

There was no option to disable performance-hogging features globally. It would be amazing if some of this stuff could be blocked automatically. With a large group, there is a significant number of those who ignore polite instructions. For example, people still added comments on the board, even for truly operational stuff that needed quick resolution (such as asking to be reassigned to a different breakout room). There is an ongoing Miro wish-list item for board configuration options, so if you’re a Miro user, give it a gentle nudge. Onboarding inexperienced people would be much easier if they can’t break stuff for themselves easily.

To prevent people from moving around a lot, we created isolated areas for individual groups.

Each area was technically a separate frame in Miro, and there’s quite a neat trick that Miro automatically increases frame numbers when pasting. So name the first frame “Group 1”, copy and paste it, and the new frame automatically becomes “Group 2”. This works even for bulk pasting, so it was fairly easy to auto-number 50 frames. We just asked people to check the Zoom breakout room number and find the corresponding board area to work on. For the most part, that worked well (some people complained that they didn’t know where to see the Zoom room number, but that’s not a Miro problem).

The major pain-point for Miro newbies in my workshops is usually toggling between edit and move modes. People usually click somewhere expecting to edit, but end up accidentally moving stuff, especially background frames. With small sessions, I can just ask people to undo unintended changes, but at large scale that would not work. Because of that, make sure to lock all background objects and anything that users are not supposed to interact with directly (headers, text, links, frames).

The Miro collaboration part worked surprisingly well. I kind of expected Miro to fall over because we just used a regular team account and created a publicly editable board, but there were no major technical glitches. A few participants complained that someone else was deleting their stuff as they were putting it on Miro. I’m not sure if we hit some performance limits and the objects disappeared due to overload, if someone was maliciously doing it, or if someone just misunderstood where they need to be on the board and did a bit of spring cleaning. In general this was not a big issue, and the Miro collaboration worked smoothly.

There was only one instance when things went a bit crazy, but it was not due to technical performance. Someone threw the whole workshop off by copying the whole Miro board and pasting it slightly shifted to the left, overlapping with the board objects. Could have been a troll, or more likely someone just accidentally doing something. In an ideal world, we’d be able to prevent this, but I don’t see how. Miro currently doesn’t offer any features to lock large-scale board changes. If anyone from the Miro team ever reads this, maybe you could have a setting to prevent people from copy-pasting over locked objects, or locking down activities to just adding/editing stickies, or something like that.

For more tips on running large Miro sessions, check out the official advice on improving board performance. Also, to keep your inbox sane, with a large group make sure to turn off board notifications upfront.

Zoom, zoom, you broke my room

In retrospect, Zoom was probably the wrong choice for this session. Large participatory sessions somehow fell through the cracks on their product map. Zoom webinars are great for large groups watching a single presenter, but do not allow breakout rooms. Zoom meetings allow breakout rooms, but they don’t scale well.

People kept dropping off and rejoining the breakout rooms. For the first exercise, this was partially because of bad expectation management (more on this in the next section). For the other exercises, it definitely had more to do with the Zoom software, flaky wifi, devices losing power or gremlins. Whatever the reason, with a large number of participants, rooms were very difficult to manage. There was no way to just send people to a breakout room if they rejoined during a collaboration session. One of the co-hosts had to manually do this for every participant, and there was a constant stream of people rejoining. There was no way to exclude co-hosts easily from automatic room assignment, so people who needed to help would be thrown out of the main room and had to quit the meeting and rejoin.

An even bigger issue is that there was no easy way to send people back to the same room if they rejoined. Zoom doesn’t automatically show previous assignments. With small workshops, I usually just ask the participants which room they’d like to go to, but with a large group this was not feasible. The constant stream of people rejoining made it impossible to communicate with individual participants and wait for them to respond. Some prompted us in the Zoom chat, but you’d need to have experience with Zoom rooms to know that. Most participants did not. Some even posted comments on the Miro board about this, which were impossible to monitor easily. Given the constraints, the best we could do was to randomly send people to rooms, which disrupted collaboration. People would suddenly find themselves in a totally unfamiliar group continuing to work on a different part of the board. Participants didn’t seem to drop off that much during the presentations, though, so this is definitely related to breakout rooms.
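To illustrate what remembering previous assignments could look like, here is a minimal Python sketch of a "sticky" room registry. It is purely hypothetical and not based on any Zoom feature or API: the `RoomRegistry` class, the `room_for` method and the use of an email address as participant identifier are all assumptions made for the example. Someone who rejoins gets their earlier room back, and a newcomer goes to the room with the fewest remembered members.

```python
from collections import Counter


class RoomRegistry:
    """Hypothetical helper that remembers each participant's breakout room."""

    def __init__(self, room_count: int) -> None:
        self.room_count = room_count
        # participant id -> room number (rooms are numbered from 1)
        self.assignments: dict[str, int] = {}

    def room_for(self, participant_id: str) -> int:
        """Return the previous room on rejoin, otherwise pick the smallest room."""
        if participant_id in self.assignments:
            return self.assignments[participant_id]
        # Sizes count everyone ever assigned, including people who dropped off.
        sizes = Counter(self.assignments.values())
        # Fewest remembered members wins; ties go to the lowest room number.
        room = min(range(1, self.room_count + 1), key=lambda r: (sizes[r], r))
        self.assignments[participant_id] = room
        return room


registry = RoomRegistry(room_count=40)
first_room = registry.room_for("ana@example.com")
# A later rejoin by the same person resolves to the same room.
same_room = registry.room_for("ana@example.com")
```

A co-host would still have to move each person by hand, but a lookup like this at least removes the need to ask participants where they were.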

There were a few other glitches that seem like Zoom issues. For example, although participant microphones were blocked by the Zoom meeting setup, people occasionally complained that they could hear someone else talking over me, as if another participant was broadcasting audio. The rest of the group could not hear the additional sound, so this seemed like a weird bug. It did, however, happen several times to different people, so it seems like a genuine problem.

If I ever try to do a large session again, I would probably try some other software. I don’t have anything yet to recommend personally, but several people suggested looking into Hopin. Alternatively, maybe look into one of the platforms for selling online courses. I would expect them to have better group management capabilities. Anyway, if you’re reading this and have a good suggestion you tried out and it worked well, I’d love to hear from you. Please drop me an email about that.

Manage participation expectations upfront

During the first exercise, many people in breakout rooms seemed to be expecting just to listen in instead of participate. From friends who attended the webinar, I’ve heard that they ended up in rooms filled mostly with people on phones, almost nobody having a camera turned on, and very few people wanting to turn on audio. I mostly attribute this to us not managing participant expectations well. Although we tried to communicate that the session would be a participatory workshop, both by email and on the web site, many people obviously didn’t get the message, or didn’t take it seriously.

Starting the first exercise caused a big disruption to the session, when people finally realised that they would need to participate in groupwork. Some participants switched to YouTube streaming. Some just dropped off and didn’t come back. Some people quit and re-joined from laptops. Because of the bad room management capabilities, participants mostly ended up in different groups upon rejoining, causing a significant shift in membership at a time when groups were supposed to discuss and interact. We lost about 100 people, so the groups were no longer balanced. Some had dozens of members, some were left with only one or two. This was clearly visible on the Miro board with some areas very active and some completely dead.

My big lesson from this was that it’s critical to manage participant expectations better. Prompting people in emails is obviously not good enough. Perhaps, during registration, ask people to select if they want to actively work with others, or just observe. People who select the first option would get the teleconference link, and people who select the second would get the YouTube stream link.
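The registration idea above boils down to a simple mapping from the attendee's answer to the link they receive. A minimal Python sketch follows, assuming a registration form with a single "participate" or "observe" field. The `Mode` enum, the `joining_link` function and both URLs are placeholders invented for the example, not real event links or part of any registration tool.

```python
from enum import Enum


class Mode(Enum):
    """How a registrant wants to attend (hypothetical form field values)."""
    PARTICIPATE = "participate"
    OBSERVE = "observe"


# Placeholder links for illustration only.
LINKS = {
    Mode.PARTICIPATE: "https://example.com/teleconference",
    Mode.OBSERVE: "https://example.com/youtube-stream",
}


def joining_link(choice: str) -> str:
    """Map the registration answer to the link sent in the confirmation email.

    Raises ValueError for any answer other than the two known options.
    """
    return LINKS[Mode(choice.strip().lower())]


link_for_active = joining_link("participate")
link_for_passive = joining_link("Observe")
```

Rejecting unknown answers, instead of defaulting to one of the links, keeps the choice explicit, which is the whole point of asking.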

Do a warm-up exercise

Another key thing I would do differently is introduce another task, perhaps very short (2-3 minutes), for participants to form groups. The first exercise required people to switch from passive listening to active collaboration, meet two dozen new people quickly, form an effective group, find their place on Miro, and do the task I set up for the exercise. This was clearly too much. People were reluctant to speak immediately, they had audio/video turned off, and they weren’t ready to just jump in.

Assuming some kind of drop-off will be unavoidable anyway, as some people won’t read the announcements, next time I would introduce an initial warm-up exercise where people will get a trivial task, for example introducing themselves to the group and writing something on the Miro board. This will help form groups, iron out technical issues, and cause the drop-off for people who want to stay passive before the real work starts.

A happy accident is that several friends who are facilitators or coaches in their day jobs attended the webinar, so they took it upon themselves to help their group get moving quickly. Next time, if at all possible, I would probably try to place one such insider into every group, to help kick things off. Again, with Zoom it’s not feasible to do this with the way room assignments work now, so an alternative tool would probably do a better job. (Yes, I know it is in theory possible to manually reassign people, but try finding the co-host quickly in the list of 500 participants before opening rooms…)
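For a tool that accepts a prepared room list, seeding each group with an insider is easy to plan ahead. The Python sketch below is one possible approach and not tied to any specific platform: the `assign_rooms` function and all names are hypothetical. Facilitators are dealt out round-robin first, then everyone else is shuffled and added to whichever room is currently smallest.

```python
import random


def assign_rooms(facilitators, participants, room_count, seed=None):
    """Return a list of rooms, each a list of names, seeded with facilitators."""
    rng = random.Random(seed)
    rooms = [[] for _ in range(room_count)]
    # Facilitators go first, round-robin, so rooms get one each while they last.
    for i, name in enumerate(facilitators):
        rooms[i % room_count].append(name)
    shuffled = list(participants)
    rng.shuffle(shuffled)
    # Everyone else tops up the currently smallest room to keep sizes balanced.
    for name in shuffled:
        min(rooms, key=len).append(name)
    return rooms


helpers = ["facilitator-1", "facilitator-2", "facilitator-3"]
attendees = [f"attendee-{n}" for n in range(1, 10)]
plan = assign_rooms(helpers, attendees, room_count=3, seed=1)
```

With fewer facilitators than rooms, some rooms start without one, so the number of rooms would have to follow the number of available helpers.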

Live-stream for the passive audience

Another happy accident was organising the YouTube livestream. We originally got worried that too many people would show up, and we’d exceed the Zoom meeting capacity, and Jordan set up the YouTube livestream as a backup for the overflow. Some people who did not want to participate actively switched to YouTube instead. But we didn’t really do anything specific for that audience. During the presentation blocks, the content there was as good as in the main meeting, but during group work this must have looked silly. It was just a quiet screen with a single static slide. The second day, Jordan was roaming around the Miro board during exercises and streaming it to YouTube, in order to at least provide some moving images.

Next time, I would try to make the YouTube overflow stream much more active. Perhaps, during exercises, we could have co-facilitators discussing or commenting on the ongoing work, or provide some individual assignments for people watching the stream.

Also, I would definitely announce the streaming link upfront for people who just want to watch, so there’s no big shift in membership once the exercises start. Instead of just keeping it as a backup plan, actively promote it as an option for attending the session.

Thanks to Dejan Dimic, David Evans, Cirilo Wortel and Christian Hassa for providing feedback.

Cover photo by Adam Whitlock on Unsplash.